How AI is Transforming the Software Development Lifecycle (SDLC)

Imagine if every step of building software had a smart assistant helping out. That future is quickly becoming a reality: the Software Development Lifecycle (SDLC), from initial planning all the way to monitoring a live application, is undergoing a dramatic shift thanks to artificial intelligence.

Traditional development relied mostly on human effort and hindsight, but an AI-driven SDLC brings automation, predictive insights, and self-learning capabilities into the mix. This means faster delivery, higher quality, and more proactive management of software.

In this blog post, we’ll walk through each phase of the SDLC (Plan, Code, Build, Test, Release, Deploy, Operate, Monitor) to see how AI is changing the game at every step.

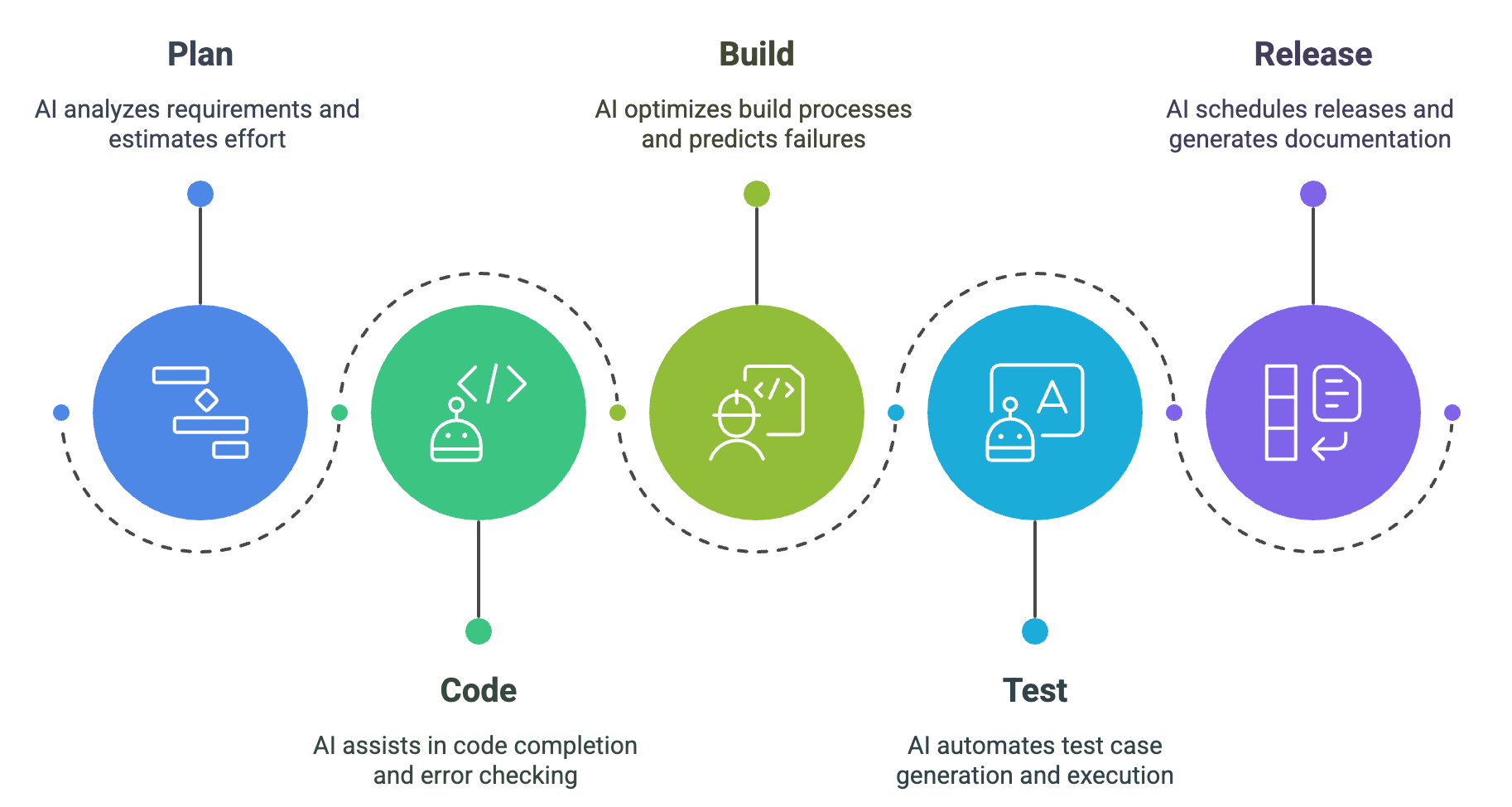

Plan

The planning phase sets the foundation for a successful project. It’s where ideas turn into requirements, timelines, and resources. Traditionally, planning relied on experience and guesswork, but AI is now supercharging this phase with data-driven insights and automation. In an AI-driven planning phase, software teams get a clearer roadmap with less uncertainty from the start. Key AI opportunities in Plan include:

- Intelligent Requirements Analysis: AI tools can interpret and organize project requirements. For example, given a bunch of brainstorming notes or user stories, an AI can summarize them, group related ideas, and even spot missing pieces. This helps ensure no critical requirement slips through the cracks early on.

- Effort Estimation & Roadmapping: Instead of purely guessing timelines, AI can analyze historical project data to estimate how long tasks might take. It looks at past velocity and similar projects to predict a realistic schedule. All stakeholders get more reliable timelines, and project managers get help building detailed roadmaps with milestones that make sense.

- Risk Identification: Planning is also about foreseeing challenges. AI systems excel at scanning past projects and industry data to flag potential risks. For instance, an AI might warn that “integration with XYZ API has caused delays in similar projects” or highlight compliance requirements early. This lets the team proactively plan around pitfalls.

- Resource Allocation: Deciding who should do what and how to allocate budgets can be simplified by AI. Intelligent schedulers can suggest a suitable team makeup and resource distribution for a project. They balance workloads, identify whether you’ll need extra developers or specialists, and adjust timelines dynamically. This means more efficient use of your talent and funds from day one.
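To make the effort-estimation idea concrete, here is a minimal Python sketch. It is a plain statistical baseline rather than a trained model: it assumes you have story-point velocities from past sprints and turns them into optimistic, expected, and pessimistic sprint counts. The function name `estimate_sprints` and its inputs are hypothetical, chosen for illustration.

```python
import math
from statistics import mean


def estimate_sprints(backlog_points: float, past_velocities: list[float]) -> dict[str, int]:
    """Estimate how many sprints a backlog needs, based on historical velocity.

    Hypothetical helper: an AI estimator would learn from far richer signals,
    but the core idea of projecting from past throughput is the same.
    """
    if not past_velocities or any(v <= 0 for v in past_velocities):
        raise ValueError("need at least one sprint, and every velocity must be positive")
    return {
        # Best sprint observed so far: the optimistic case.
        "optimistic": math.ceil(backlog_points / max(past_velocities)),
        # Average throughput: the most likely case.
        "expected": math.ceil(backlog_points / mean(past_velocities)),
        # Slowest sprint observed so far: the pessimistic case.
        "pessimistic": math.ceil(backlog_points / min(past_velocities)),
    }


# Usage: a 120-point backlog, with four past sprints of completed points.
print(estimate_sprints(120, [20, 25, 30, 25]))
# {'optimistic': 4, 'expected': 5, 'pessimistic': 6}
```

Reporting a range instead of a single date keeps the uncertainty visible to stakeholders, which is much of the value of data-driven estimates.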

In short, AI in the planning phase helps organizations start projects on the right foot. It converts high-level ideas into actionable plans, backed by data. For a stakeholder, this means greater confidence that software initiatives are feasible and aligned with business goals before heavy coding even begins.

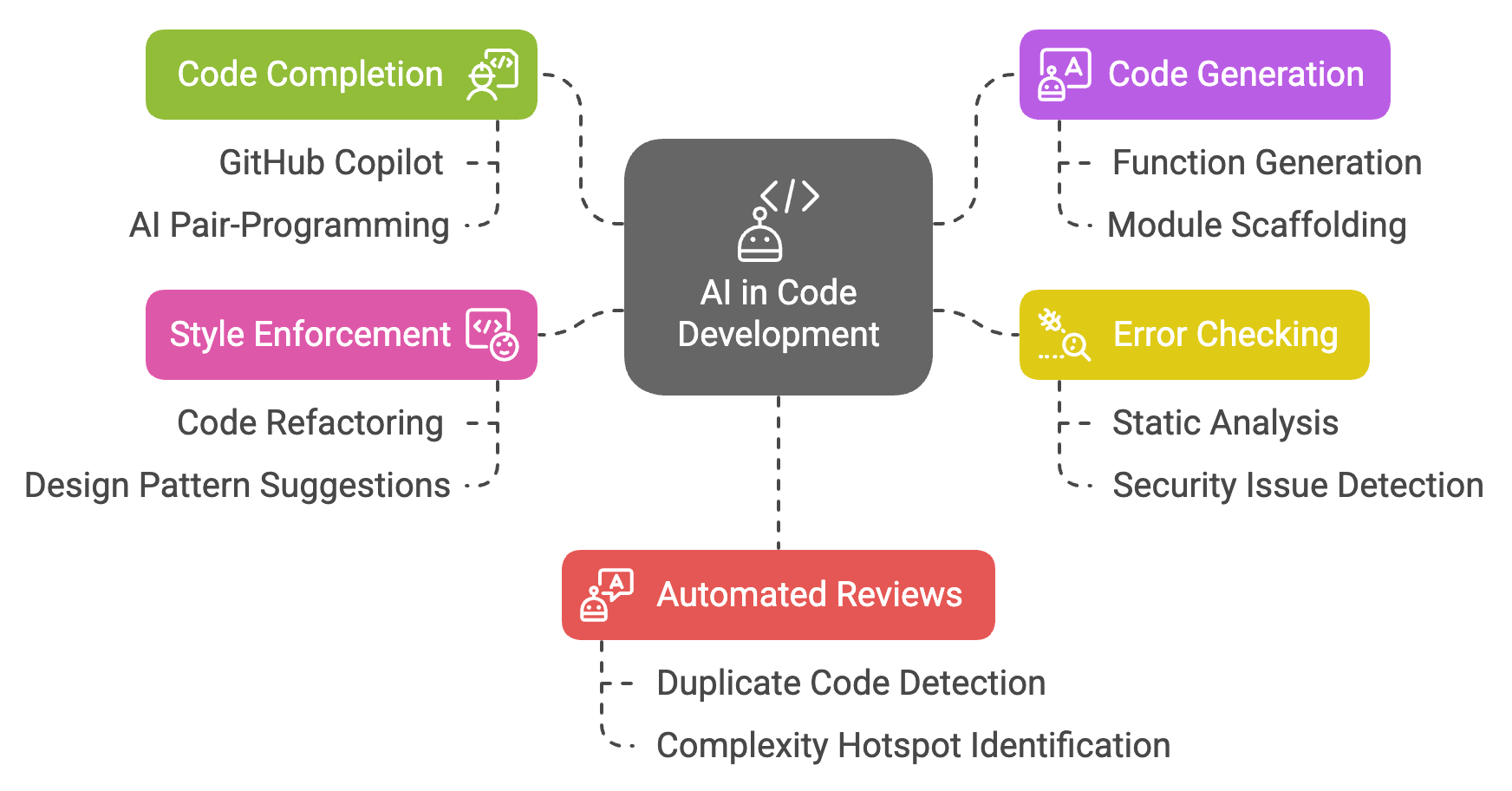

Code

The coding phase is where developers turn plans into reality by writing software. It’s traditionally the most labor-intensive step – lots of typing and debugging. Enter AI, the developer’s new coding partner. AI in the coding phase acts like an on-demand assistant, speeding up development while helping maintain quality. Here’s how AI is transforming Code:

- AI-Assisted Code Completion: One of the most visible impacts of AI is in auto-completing code and suggesting snippets as developers type. Tools like GitHub Copilot and various AI pair-programming assistants can anticipate your needs – generating boilerplate code, function templates, or even entire sections based on a comment or a function name. This accelerates the writing of code significantly, letting developers focus on logic and design rather than rote typing.

- Code Generation: Beyond just completing what you started typing, modern generative AI can create code from scratch based on descriptions. For example, a developer can ask, “Generate a function to sort a list of orders by date,” and the AI will provide a candidate implementation. This is like having a junior programmer who works at lightning speed. It’s especially handy for repetitive tasks or scaffolding new modules.

- Real-time Error Checking and Bug Hunting: AI doesn’t wait until testing to catch errors. Integrated into code editors, AI can spot potential bugs or security issues in real time. Maybe you used a variable that might be null – the AI can warn you before you even run the code. These AI-powered static analysis tools go beyond simple linters; they learn from countless codebases to detect subtle issues and “code smells” that a human might miss during an initial write-up.

- Style and Best-Practice Enforcement: Keeping a consistent coding style and following best practices is easier with AI watching. AI coding assistants can automatically refactor code to be cleaner and more efficient, suggest better naming conventions, or recommend using a known design pattern when they recognize a familiar scenario. This ensures the codebase stays high-quality and maintainable, which is a long-term win for the team.

- Automated Code Reviews: In many teams, peer code review is a critical step. AI can help here by reviewing code changes before humans do. It can provide an initial pass – pointing out duplicate code, complexity hotspots, or possible logic issues. This speeds up the review cycle and allows human reviewers to focus on more complex, nuanced feedback.
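For a prompt like “Generate a function to sort a list of orders by date,” a reasonable candidate might look like the Python sketch below. The `Order` class and its fields are hypothetical. Note the handling of orders with no date: it is exactly the kind of edge case a generated first draft can miss and a human reviewer should check for.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Order:
    order_id: str
    placed_on: Optional[date]  # None when the order date is unknown


def sort_orders_by_date(orders: list[Order], newest_first: bool = False) -> list[Order]:
    """Return a new list sorted by placement date; undated orders always go last."""
    dated = [o for o in orders if o.placed_on is not None]
    undated = [o for o in orders if o.placed_on is None]
    # sorted() is stable, so orders placed on the same date keep their input order.
    dated = sorted(dated, key=lambda o: o.placed_on, reverse=newest_first)
    return dated + undated


orders = [
    Order("A-1", date(2024, 3, 1)),
    Order("A-2", None),
    Order("A-3", date(2024, 1, 15)),
]
print([o.order_id for o in sort_orders_by_date(orders)])  # ['A-3', 'A-1', 'A-2']
```

Separating undated orders first avoids comparing `None` with a `date`, which would raise a `TypeError` at runtime.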

For developers, these AI-driven tools mean less time on grunt work and fewer simple mistakes. For engineering managers and execs, it means development cycles can be faster and produce more reliable code. However, it’s worth noting that AI suggestions aren’t infallible – developers still need to guide and correct the AI. (We’ll talk more about such challenges later.) Overall, coding with AI is like coding with a smart sidekick: one that boosts productivity and even helps educate the team by sharing best practices on the fly.

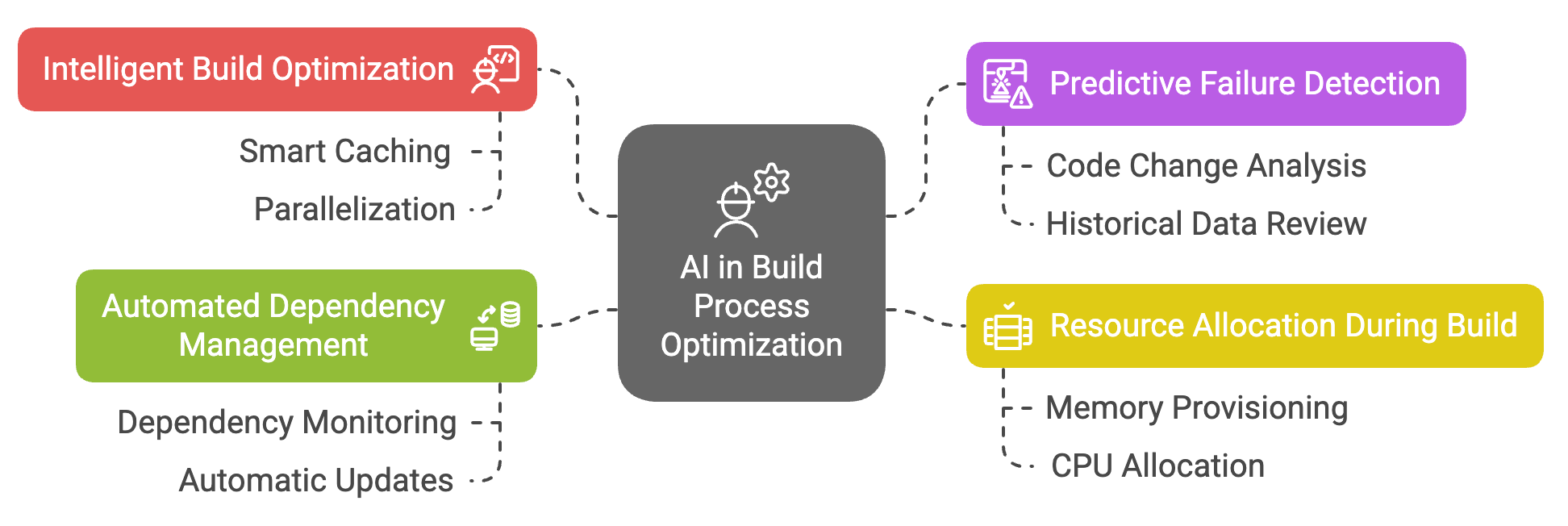

Build

Once code is written, it’s time to build – compiling the code, resolving dependencies, and preparing artifacts that can be run or deployed. In a modern continuous integration (CI) setup, builds happen frequently and can be complex. AI is stepping in to optimize the Build phase by making these processes faster and more efficient, which in turn shortens the feedback loop for developers. Key innovations in this stage include:

- Intelligent Build Optimization: AI can analyze past build processes to figure out which steps take the longest or frequently fail, and then optimize them. For instance, if certain modules haven’t changed, an AI-driven system might skip rebuilding those components (smart caching), saving time. It can also parallelize tasks in clever ways. Essentially, the build becomes smarter: it does work only when needed and avoids redundant tasks.

- Predictive Failure Detection: Nothing is more frustrating than waiting for a long build only for it to fail at the end. AI helps by predicting build failures early. By looking at the latest code changes and historical data, an AI system might say, “This change touches the authentication module, which often causes integration test failures – let’s run those tests first.” If a problem is likely, it can catch it in minutes rather than hours. This predictive scheduling means less wasted time on broken builds.

- Resource Allocation During Build: Build processes consume computing resources (CPU, memory, etc.), especially when compiling large projects or running many tests. AI can dynamically allocate resources in the build environment to where they’re needed most. For example, if it detects the database migration step is memory-intensive, it can provision extra memory for that step. This ensures the build runs as efficiently as possible on the available infrastructure, avoiding bottlenecks.

- Automated Dependency Management: Modern software often relies on dozens of libraries and packages. Keeping those up to date and compatible is part of the build’s job. AI tools can monitor dependencies and even automatically update or patch them if a new version is faster or more secure – all while checking that the update won’t break the build. This proactive dependency management keeps the software secure and up-to-date with minimal manual effort.
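The “skip what hasn’t changed” idea behind smart caching can be sketched in Python with content fingerprints: hash each module’s files and rebuild only the modules whose hash differs from the last successful build. This is a deliberately simple, deterministic version; build tools such as Bazel and Gradle implement far more rigorous input-hashing caches, and an AI layer would add learned signals on top. The module layout and function names here are assumptions for illustration.

```python
import hashlib
from pathlib import Path


def module_fingerprint(module_dir: Path) -> str:
    """Hash every file under module_dir (relative path + contents) into one digest."""
    digest = hashlib.sha256()
    for path in sorted(p for p in module_dir.rglob("*") if p.is_file()):
        data = path.read_bytes()
        # Include the relative path and a length prefix so that renames and
        # boundary shifts between files also change the fingerprint.
        digest.update(path.relative_to(module_dir).as_posix().encode() + b"\0")
        digest.update(len(data).to_bytes(8, "big"))
        digest.update(data)
    return digest.hexdigest()


def changed_modules(modules: dict[str, Path], cache: dict[str, str]) -> dict[str, str]:
    """Return {module: new_fingerprint} for modules that differ from the cache.

    The caller should merge the result into the cache only after the build
    succeeds, so a failed build is retried on the next run.
    """
    changed = {}
    for name, directory in sorted(modules.items()):
        fingerprint = module_fingerprint(directory)
        if cache.get(name) != fingerprint:
            changed[name] = fingerprint
    return changed
```

In a CI job, `cache` would be loaded from the last successful run; only the returned modules get rebuilt, and `cache.update(...)` is applied once the build passes.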

For teams, an AI-optimized build phase means faster turnaround from “code written” to “code tested and ready”. Developers spend less time twiddling their thumbs waiting for builds, and more time actually building features. The business benefits from these efficiencies as well: faster builds contribute to quicker releases, allowing the organization to be more agile and respond to market needs sooner.

Test

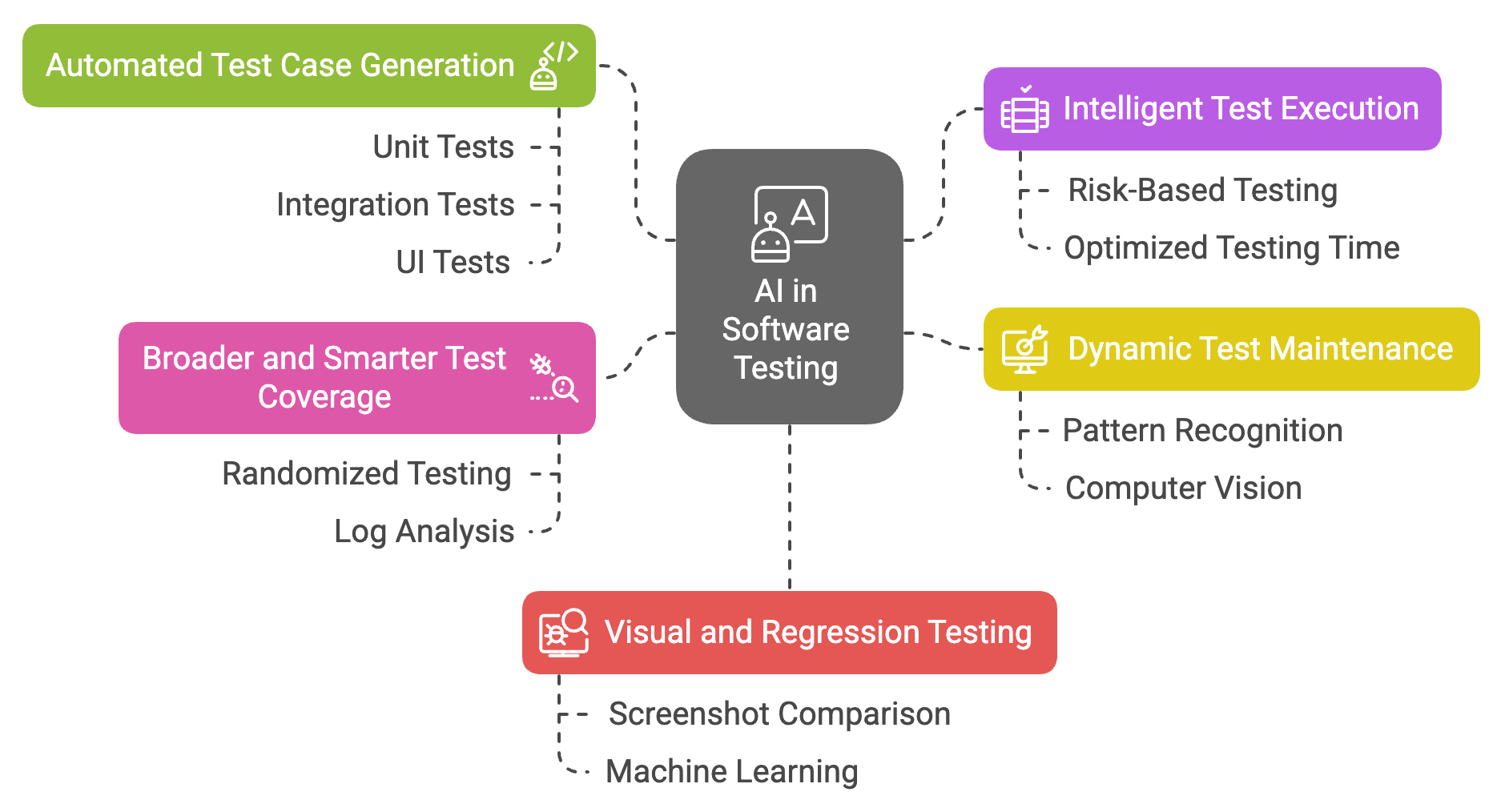

Testing is the quality gate of the SDLC – it ensures that the code does what it’s supposed to and nothing it’s not supposed to. It’s a phase that can never be skipped, but it can be incredibly time-consuming. AI is revolutionizing the Test phase by bringing automation and intelligence that go far beyond traditional scripted tests. Here’s how AI makes testing faster, smarter, and more thorough:

- Automated Test Case Generation: One of the coolest abilities of AI in testing is automatically writing test cases. Given code or even just requirements, AI tools can generate unit tests, integration tests, and even UI tests. For example, an AI might analyze a function and suggest a suite of unit tests covering various edge cases. This means QA engineers and developers get a huge head start on testing – the mundane tests are created for them, so they can focus on refining important scenarios.

- Intelligent Test Execution: It’s not just writing tests – AI can also decide which tests to run and when. In a large system, running the entire test suite can take hours. AI-driven testing platforms can prioritize tests likely to catch new bugs based on the recent code changes. If you only modified the payment processing module, the AI might run tests related to payments first (or exclusively), speeding up feedback. This risk-based testing ensures critical paths are verified ASAP, and it optimizes use of testing time.

- Dynamic Test Maintenance: As software evolves, tests that were once valid might start failing not because of new bugs, but because the software’s behavior changed legitimately (for example, the text on a button changed from “Submit” to “Send”). AI can automatically update and maintain test scripts when such benign changes occur. It uses techniques like pattern recognition or computer vision for UI tests to adapt to new screens or workflows. This reduces the notorious maintenance burden of test suites.

- Broader and Smarter Test Coverage: AI enables testing approaches that humans alone couldn’t achieve easily. For instance, AI can perform randomized or generative testing (sometimes called fuzz testing) by creating many random inputs and seeing if the software breaks. It can simulate thousands of users doing different things in a web application to find concurrency issues. It can even analyze application logs and user behavior in production to design new test cases that mirror real-world usage patterns. The result is a much broader net to catch bugs, including edge cases we might not think of ourselves.

- Visual and Regression Testing: For front-end heavy applications, AI-based testing tools can visually inspect the UI and catch visual bugs or regressions (like a button that shifted out of place or a color that’s now hard to read) by comparing screenshots intelligently. They use machine learning to differentiate between significant changes and trivial differences (like a minor pixel shift), alerting the team only when something truly needs attention.
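Risk-based test selection can be approximated with a simple heuristic, sketched here in Python: run tests that exercise a changed module first, and within each group run the most failure-prone tests earliest. A learning system would replace these hand-picked signals with a trained model; the `KnownTest` record, its coverage mapping, and the failure rates are hypothetical inputs you would derive from coverage reports and CI history.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KnownTest:
    name: str
    covers: frozenset[str]   # modules the test exercises, e.g. derived from coverage reports
    failure_rate: float      # fraction of recent CI runs in which this test failed


def prioritize(tests: list[KnownTest], changed_modules: set[str]) -> list[str]:
    """Order test names: tests touching changed modules first, then by failure history."""
    def sort_key(test: KnownTest) -> tuple[bool, float, str]:
        touches_change = bool(test.covers & changed_modules)
        # False sorts before True, so tests that touch the change come first;
        # negating the rate runs the most failure-prone tests earliest in each group.
        return (not touches_change, -test.failure_rate, test.name)
    return [t.name for t in sorted(tests, key=sort_key)]


tests = [
    KnownTest("test_login", frozenset({"auth"}), 0.02),
    KnownTest("test_refund", frozenset({"payments"}), 0.10),
    KnownTest("test_checkout", frozenset({"payments", "cart"}), 0.05),
    KnownTest("test_profile", frozenset({"users"}), 0.30),
]
print(prioritize(tests, {"payments"}))
# ['test_refund', 'test_checkout', 'test_profile', 'test_login']
```

Running the payment tests first mirrors the example above: if only the payment module changed, its tests give the fastest useful feedback.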

Overall, AI in testing means higher quality software delivered faster. Many tedious testing tasks are handled automatically, and the clever prioritization means critical issues are caught early. This translates to more reliable releases and happier customers (since fewer bugs slip into production). For developers and QA, it’s like having an army of tireless test engineers ensuring nothing was overlooked.

Release

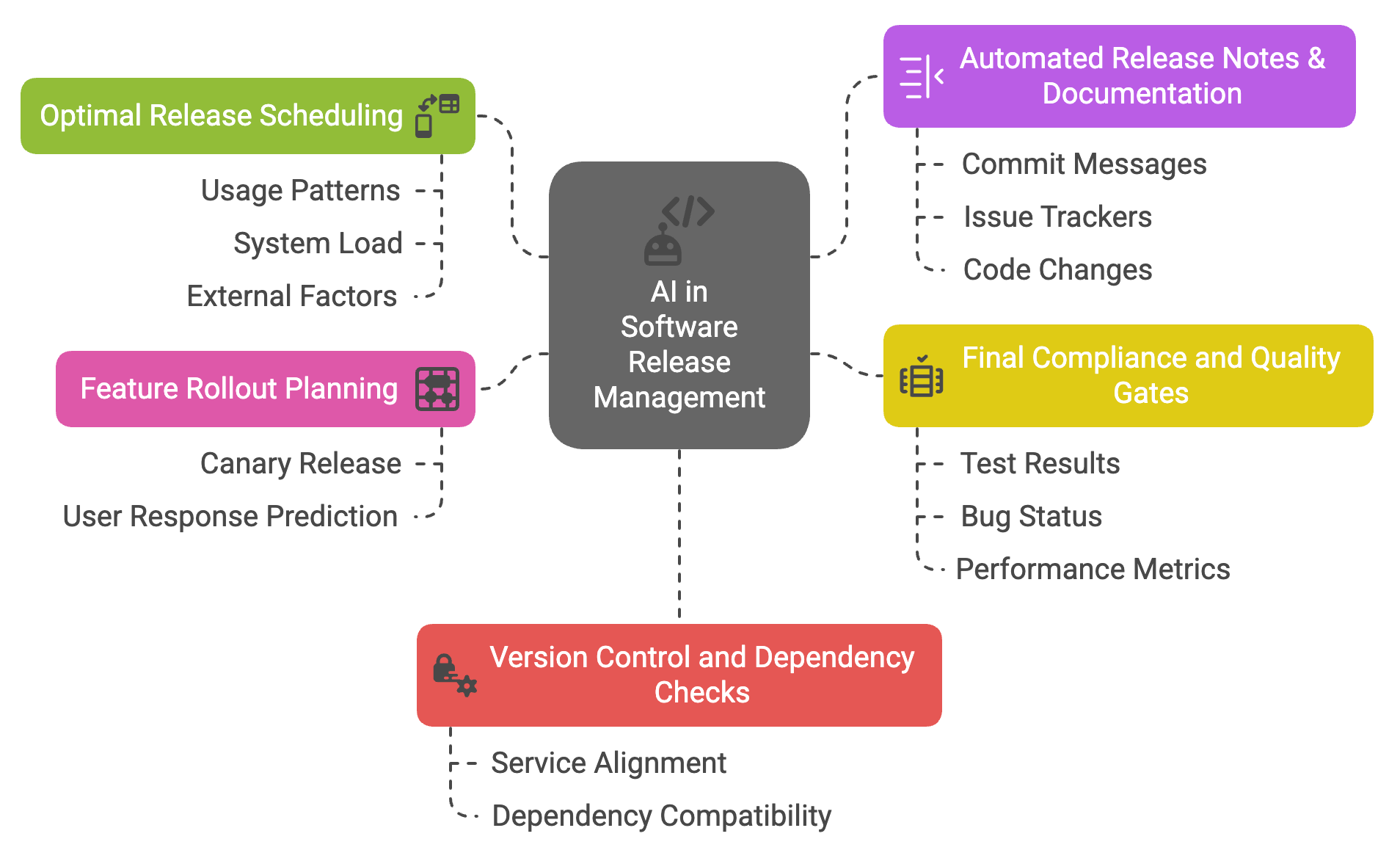

The release phase is all about getting the tested software packaged and ready for deployment, and doing the final checks before it goes live. In a continuous delivery setup, this can happen frequently and must be done correctly each time. AI contributes to Release management by ensuring that this phase is as smooth and safe as possible. Here’s how AI assists when it’s time to push the big red “Release” button:

- Optimal Release Scheduling: Deciding when to release can be a science in itself. AI can analyze application usage patterns, system load, and even external factors (like time zones or peak user activity times) to recommend the best timing for a release. Perhaps it learns that Fridays at 3 AM have the lowest user activity, suggesting that as the ideal release window to minimize impact. This kind of predictive insight helps operations teams release with confidence that users won’t be disrupted.

- Automated Release Notes & Documentation: Preparing release notes for stakeholders and users is a task AI can simplify. By scanning commit messages, issue trackers, and code changes, AI can generate draft release notes that summarize new features, improvements, and bug fixes in plain language. This ensures nothing is forgotten in the announcement and saves product managers time. Everyone gets a clear picture of what each release contains without anyone compiling the information by hand.

- Final Compliance and Quality Gates: Before a release is approved, certain conditions should be met: all tests green, no critical bugs open, performance within acceptable range, etc. AI-driven release gates can automatically verify these criteria. For example, a gate might check that code coverage stayed above a threshold or that no new security vulnerabilities were introduced in this release. If something’s off, the gate puts the release on hold and alerts the team, preventing risky deployments.

- Feature Rollout Planning: AI can assist in planning how features are rolled out to users. For instance, it might suggest doing a canary release (releasing to a small percentage of users first) for a particularly impactful feature, based on its assessment of risk. It could also predict user response by comparing with similar past feature rollouts. This helps business leaders make data-driven decisions on whether to launch a feature to everyone or gradually ramp up.

- Version Control and Dependency Checks: Releasing a new version often means updating many components and libraries. AI tools can ensure compatibility by checking that all services and dependencies align with the new version. If one microservice is not updated and could break with the new release, the AI will flag it. This holistic oversight reduces those “oh no, we forgot to update X” moments during a release.
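Quality gates are ultimately explicit checks, so a minimal Python sketch helps show what an automated gate evaluates. The field names and thresholds (80% coverage, 300 ms p95 latency) are assumptions; in an AI-assisted pipeline, a model might tune these thresholds or add a learned risk score, while the hold-and-explain behavior stays the same.

```python
from dataclasses import dataclass


@dataclass
class ReleaseCandidate:
    tests_green: bool
    open_critical_bugs: int
    coverage: float            # fraction of lines covered, 0.0 to 1.0
    new_vulnerabilities: int   # e.g. reported by a dependency or code scanner
    p95_latency_ms: float      # from a performance test run


@dataclass(frozen=True)
class GatePolicy:
    min_coverage: float = 0.80
    max_p95_latency_ms: float = 300.0


def gate_failures(rc: ReleaseCandidate, policy: GatePolicy = GatePolicy()) -> list[str]:
    """Return the reasons to hold the release; an empty list means the gate passes."""
    reasons = []
    if not rc.tests_green:
        reasons.append("test suite is not green")
    if rc.open_critical_bugs > 0:
        reasons.append(f"{rc.open_critical_bugs} critical bug(s) still open")
    if rc.coverage < policy.min_coverage:
        reasons.append(f"coverage {rc.coverage:.0%} is below {policy.min_coverage:.0%}")
    if rc.new_vulnerabilities > 0:
        reasons.append(f"{rc.new_vulnerabilities} new security vulnerability(ies)")
    if rc.p95_latency_ms > policy.max_p95_latency_ms:
        reasons.append(
            f"p95 latency {rc.p95_latency_ms:.0f} ms exceeds {policy.max_p95_latency_ms:.0f} ms"
        )
    return reasons


candidate = ReleaseCandidate(tests_green=True, open_critical_bugs=1, coverage=0.72,
                             new_vulnerabilities=0, p95_latency_ms=240.0)
for reason in gate_failures(candidate):
    print("HOLD:", reason)
# HOLD: 1 critical bug(s) still open
# HOLD: coverage 72% is below 80%
```

Returning human-readable reasons, not just a pass/fail flag, is what lets the team act on the alert immediately.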

In essence, AI makes the release phase more of a calculated, trackable exercise than a leap of faith. It provides an extra set of eyes (and a very analytical brain) to double-check everything. That leads to smoother releases, less last-minute scrambling, and a higher degree of trust in each deployment. This means that the software delivery process becomes predictably reliable – a competitive advantage when you can ship updates rapidly without compromising stability.

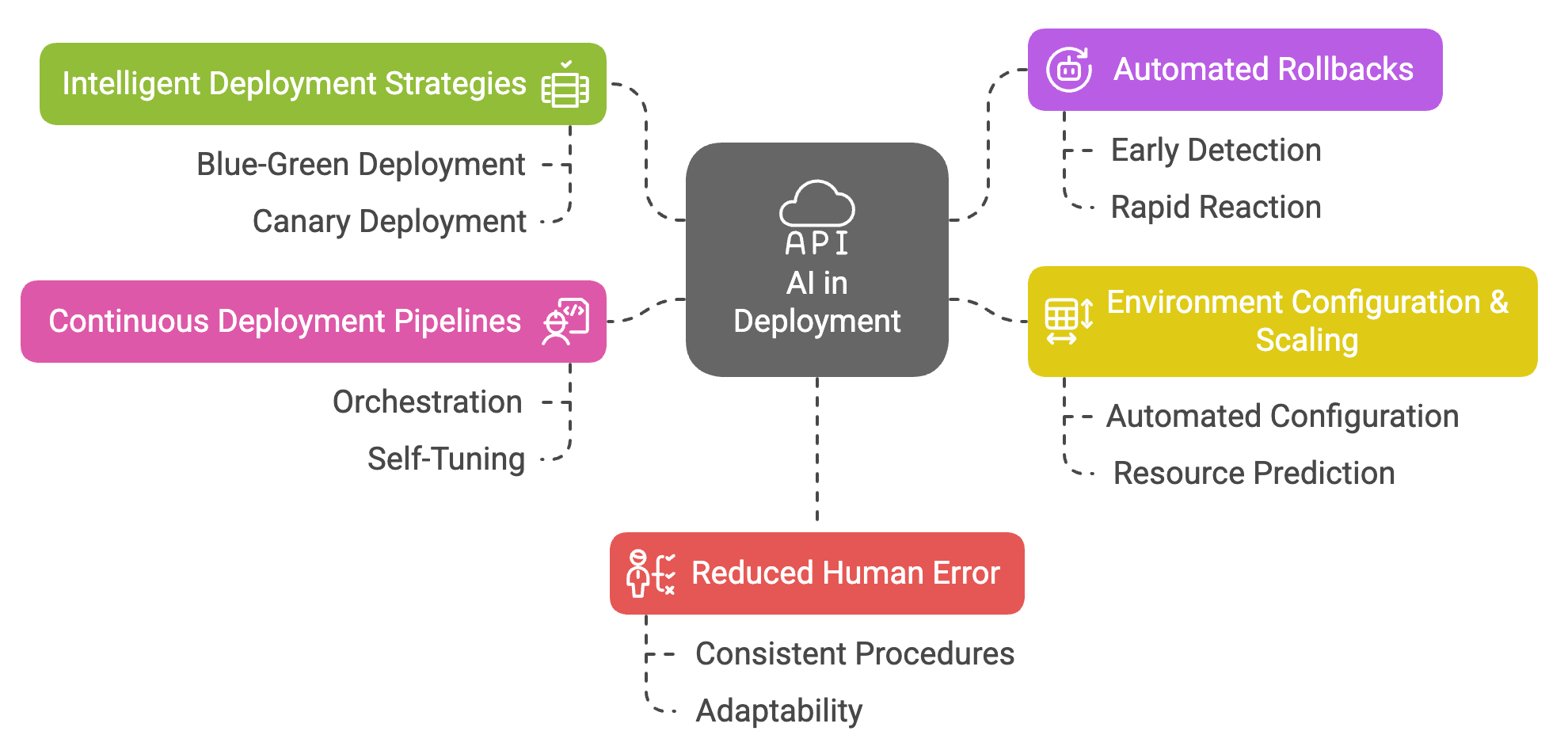

Deploy

Deployment is the act of taking the released package and rolling it out to the production environment (or staging, etc.). This phase is critical because even a perfectly tested release can go wrong if deployment is done poorly. AI is making Deploy operations more autonomous and foolproof. Think of AI in deployment as an auto-pilot ensuring new code is launched with minimal risk and downtime. Here’s how it helps:

- Intelligent Deployment Strategies: AI systems can choose and manage sophisticated deployment strategies automatically. For example, such a system can decide between a blue-green deployment and a canary deployment for a given release by analyzing how sensitive the update is. If the AI knows a change is minor, it might do a quick rolling update across servers. If it’s a major overhaul, it could start with a small slice of users (canary) and monitor results before a full rollout. These decisions are made by crunching lots of data on past deployments and current conditions.

- Automated Rollbacks: Even with all precautions, sometimes a deployment doesn’t go well – maybe a hidden bug surfaces or performance dips. AI can detect these early signs of trouble (through monitoring data) and trigger an automatic rollback to the previous stable version before many users even notice. This rapid reaction can save the day, avoiding prolonged outages or major incidents. It’s like having a safety net that reacts in seconds if something isn’t right.

- Environment Configuration & Scaling: Deploying software isn’t just copying files; it often involves setting up servers, config files, databases, etc. AI can automate environment configurations, ensuring that the new version gets the right settings and resources. Additionally, AI can predict if the new deployment will need more resources (say the new feature might spike CPU usage) and pre-provision extra servers or cloud instances. This means when you deploy, the infrastructure is already prepared to handle it – no scrambling after the fact.

- Continuous Deployment Pipelines: In organizations practicing continuous deployment, code is pushed live very frequently. AI keeps these pipelines running smoothly by orchestrating all the moving parts. It manages containerization, network settings, and service orchestrators (like Kubernetes) with learned intelligence – for example, adjusting the rate of deployments if it senses the system is getting overwhelmed. Essentially, AI ensures the deployment pipeline itself is self-tuning and efficient.

- Reduced Human Error: Deployment often involves many steps and checks, which can be prone to human error (misconfiguring a load balancer, deploying in the wrong region, etc.). By automating deployments with AI, you minimize manual steps. The AI follows tested scripts and procedures every time, but also adapts when needed. This consistency leads to more reliable deployments across the board.
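At the heart of both canary rollouts and automated rollbacks is one decision: is the new version measurably worse than the old one? A minimal Python sketch of that decision for a single metric (error rate) is below, using a one-sided two-proportion z-test. The thresholds (`z_threshold`, `min_requests`) are illustrative assumptions; production canary analysis typically compares many metrics and is tuned per service.

```python
import math


def canary_verdict(
    baseline_errors: int,
    baseline_requests: int,
    canary_errors: int,
    canary_requests: int,
    z_threshold: float = 3.0,
    min_requests: int = 500,
) -> str:
    """Return "wait", "promote", or "rollback" for a canary based on its error rate."""
    if baseline_requests <= 0:
        raise ValueError("baseline needs traffic to compare against")
    if canary_requests < min_requests:
        return "wait"  # too little canary traffic to judge yet
    p_base = baseline_errors / baseline_requests
    p_canary = canary_errors / canary_requests
    # Pooled error rate, assuming both versions actually behave the same.
    pooled = (baseline_errors + canary_errors) / (baseline_requests + canary_requests)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / baseline_requests + 1 / canary_requests))
    if std_err == 0:
        return "promote" if p_canary <= p_base else "rollback"
    z = (p_canary - p_base) / std_err
    # One-sided: only a significantly higher canary error rate triggers a rollback.
    return "rollback" if z > z_threshold else "promote"


print(canary_verdict(50, 10_000, 30, 1_000))  # rollback (3.0% vs 0.5% errors)
print(canary_verdict(50, 10_000, 6, 1_000))   # promote (0.6% is within noise)
```

Requiring a minimum amount of canary traffic before judging avoids rolling back (or promoting) on a handful of unlucky requests.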

With AI-driven deployments, companies achieve the holy grail of “push-button deployments” where new releases go live quickly and safely. For developers and DevOps teams, it removes a lot of late-night stress during release nights. For the business, it means features and fixes reach customers faster, and the deployment process scales as the company grows – without a proportional increase in complexity or risk.

Operate

After deployment, the software enters the Operate phase – it’s live, serving users, and needs to be kept running smoothly. This is where IT operations (and DevOps engineers) traditionally monitor the system, handle incidents, and perform maintenance. AI is truly transformative here, ushering in the era of self-healing infrastructure and smart operations, often termed AIOps (Artificial Intelligence for IT Operations). Here’s how AI upgrades the operations of software:

- Anomaly Detection & Self-Healing: AI systems excel at detecting anomalies – those weird patterns or deviations that might indicate a problem. In operation, AI continuously watches metrics (CPU, memory, response times, error rates) and can spot trouble before it escalates. For instance, if a service’s response time suddenly spikes or a server’s memory usage starts climbing abnormally, the AI flags it. But it doesn’t stop at detection; it can also take action. A classic example is self-healing: if an application instance crashes or a memory leak is detected, an AI-driven system could automatically restart the service or shift traffic to healthy servers, essentially fixing issues on the fly without waiting for human intervention.

- Auto-Scaling and Resource Optimization: Keeping systems running well means having the right amount of resources at the right time. AI algorithms can predict usage patterns (say, a daily traffic spike at 8 PM) and scale infrastructure proactively. They might spin up extra server instances just before the spike hits, then spin them down after, ensuring users always get a smooth experience without over-provisioning resources. This dynamic scaling saves cost while maintaining performance.

- Incident Response and Resolution: When something does go wrong that isn’t auto-fixed, AI can still help responders. AI-driven incident management systems can cross-analyze data from various sources (logs, alerts, user reports) to pinpoint the likely root cause of an incident much faster than a human team combing through logs manually. For example, if an application is down, the AI might correlate it with a recent configuration change on a database and suggest that as the culprit. It can even recommend solutions (“Revert the config to previous settings”) based on what’s worked in the past. This drastically cuts down mean time to repair when outages occur.

- Proactive Maintenance: Instead of reacting to problems, AI enables a proactive stance. Consider something like database performance: AI can notice subtle trends, such as a query getting slower over weeks, and alert the team to optimize it before it becomes a serious issue. Similarly, for hardware or cloud resources, AI might predict that a server is likely to fail soon (perhaps by analyzing system logs or SMART metrics on a drive) so the team can replace it in a planned way. This predictive maintenance avoids sudden downtime.

- Operational Insights for Improvement: Beyond keeping the lights on, AI in operations also provides insights for continuous improvement. It can identify patterns like “Every Tuesday our response time degrades due to a backup job – maybe reschedule it,” or “This new feature is causing more errors, perhaps it needs refinement.” Such insights can be fed back to the development and planning teams to make the next cycle of development better. This is how AI helps break silos between ops and dev – by turning operational data into actionable feedback.
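Predictive auto-scaling can be illustrated with a seasonal-naive forecast: assume today’s traffic at 8 PM looks like the average of recent days at 8 PM, add headroom, and size the fleet before the spike arrives. The Python sketch below is a deliberately simple stand-in for the machine-learning forecasters described above; `capacity_per_instance`, the 20% headroom, and the two-instance floor are assumed values.

```python
import math
from statistics import mean


def forecast_load(history: list[list[float]], hour: int) -> float:
    """Average load seen at `hour` across past days; history[day][hour] is requests/sec."""
    return mean(day[hour] for day in history)


def instances_needed(
    history: list[list[float]],
    hour: int,
    capacity_per_instance: float,
    headroom: float = 0.2,
    min_instances: int = 2,
) -> int:
    """Instances to have running before `hour`, with spare headroom and a minimum floor."""
    expected = forecast_load(history, hour)
    return max(min_instances, math.ceil(expected * (1 + headroom) / capacity_per_instance))


# Three days of hourly load: quiet all day, with a spike at 20:00 (8 PM).
history = []
for peak in (900.0, 1000.0, 1100.0):
    day = [100.0] * 24
    day[20] = peak
    history.append(day)

print(instances_needed(history, hour=20, capacity_per_instance=250.0))  # 5
print(instances_needed(history, hour=3, capacity_per_instance=250.0))   # 2
```

Scaling from a forecast rather than from current load is what lets the extra instances be ready before the spike instead of minutes after it.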

AI-powered operations mean your services achieve higher uptime and more consistent performance, often with a leaner ops team. It’s like having a 24/7 operations genius on staff, always learning and reacting instantly. It also frees up human engineers from firefighting mundane issues, allowing them to focus on strategic improvements. In short, AI in the operate phase helps a company deliver on those reliability promises to customers, maintaining trust and satisfaction.

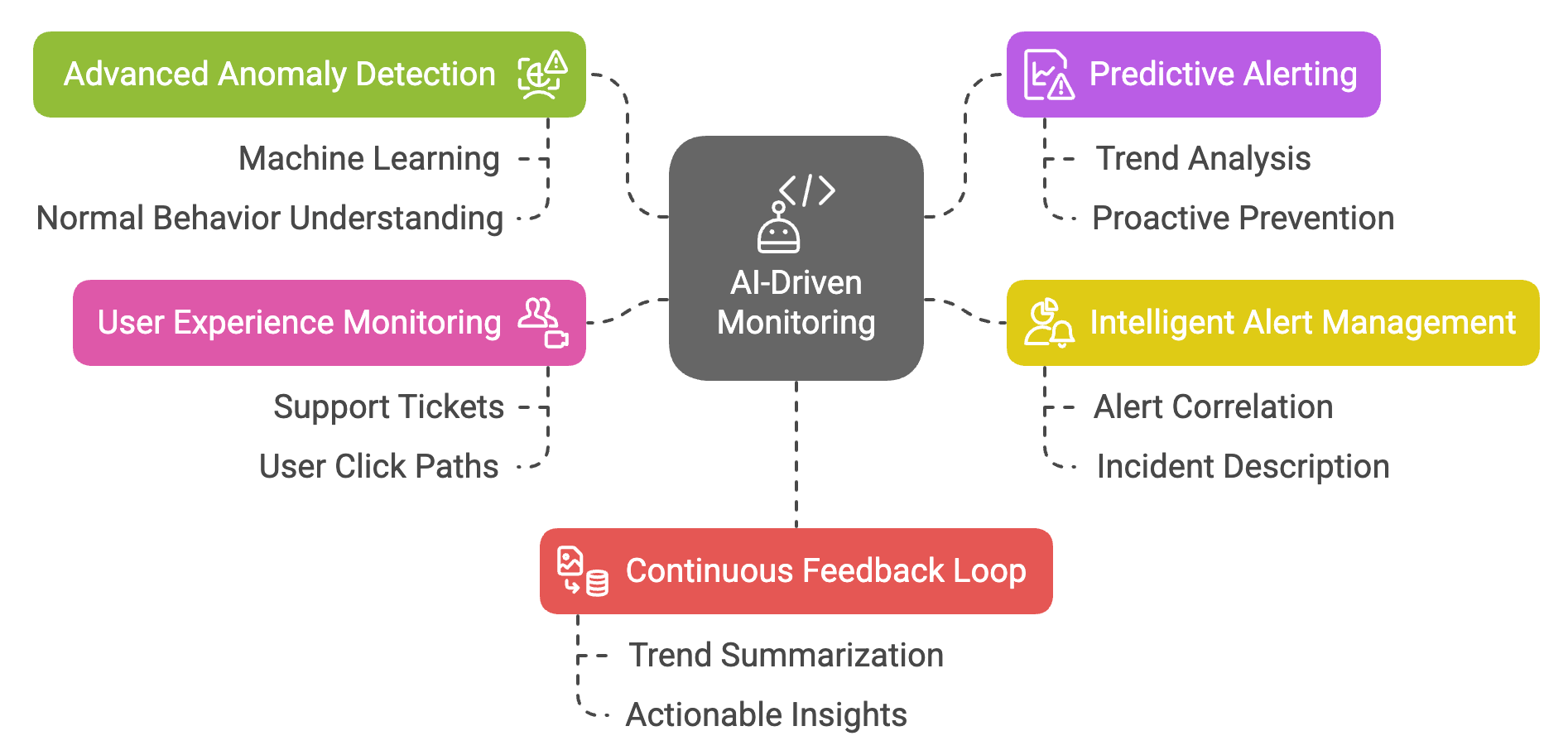

Monitor

Monitoring goes hand-in-hand with operations, but it’s worth separating because of how critical data and feedback are in the modern SDLC. The Monitor phase is about continuously observing the system’s performance, stability, and even user behavior to inform decisions. AI is elevating monitoring from simple dashboards and alerts to predictive alerting and insightful analytics. Here’s what AI brings to Monitor:

- Advanced Anomaly Detection: Traditional monitoring sets static thresholds (e.g., alert if CPU > 90%). AI-driven monitoring is far more flexible. It uses machine learning to understand what “normal” looks like for each metric and service, then detects anomalies that a static threshold might miss or would catch too late. For example, an AI system might learn that 80% CPU at noon is normal for a specific service (so it won’t alert on that), but a jump to 85% at 3 AM is not normal (even if under a generic 90% threshold) and warrants an alert. This reduces noise and catches genuine issues early.

- Predictive Alerting: Going a step beyond detecting current anomalies, AI can forecast future issues. This is predictive alerting: the system essentially says, “If trends continue, we anticipate a problem soon.” For instance, it might observe memory usage climbing steadily over several days and project that within two days the application will exhaust its memory and crash. It then alerts the team before the crash happens, giving a window to act (like restarting the service or increasing memory) to prevent any outage. This moves IT from reactive firefighting to proactive prevention.

- Intelligent Alert Management: AI helps not only in detecting issues but also in managing the flood of alerts that big systems generate. It can correlate related alerts and group them into one incident. If five different monitoring tools each fire off an alert, the AI can realize all are symptoms of one root cause and create a single, consolidated alert with a clear incident description. This means on-call engineers get one clear notification (“Service X is experiencing a database latency issue affecting multiple features”) instead of five confusing pings. Less noise, more signal.

- User Experience Monitoring: AI-driven monitoring isn’t limited to system metrics. It can also watch user interactions and feedback. For example, AI might analyze support tickets or social media to detect if users are unhappy about a new update, flagging a potential issue that pure technical monitors didn’t catch. It could also track user click paths and find anomalies (like many users unexpectedly abandoning a process midway, which could indicate a hidden bug). By watching both machines and humans, AI gives a 360° view of the software’s health.

- Continuous Feedback Loop: All the data collected in monitoring can feed back into improving the product. AI can summarize and present trends to development teams: maybe a specific feature is rarely used (so it might not need as many resources), or response times in a certain region are gradually worsening (time to bolster infrastructure there). In this way, the monitor phase, enhanced by AI, closes the loop with the plan phase – turning real-world data into actionable insights for the next iteration of the software.
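The two monitoring ideas above, learned baselines and predictive alerting, can both be sketched in a few lines of Python. The first pair of functions learns a per-hour-of-day mean and spread for a metric and flags readings far outside it, which reproduces the CPU example: 80% at noon is normal, 85% at 3 AM is not. The last function fits a straight line to recent memory readings and estimates how long until a limit is hit. Both are simple statistical stand-ins (z-scores and least-squares regression) for the richer models real AIOps tools use; the thresholds and data are illustrative.

```python
import statistics
from collections import defaultdict
from typing import Iterable, Optional


def hourly_baseline(samples: Iterable[tuple[int, float]]) -> dict[int, tuple[float, float]]:
    """Learn {hour_of_day: (mean, std_dev)} from (hour, value) samples."""
    by_hour: dict[int, list[float]] = defaultdict(list)
    for hour, value in samples:
        by_hour[hour].append(value)
    return {h: (statistics.mean(v), statistics.pstdev(v)) for h, v in by_hour.items() if len(v) >= 2}


def is_anomalous(baseline: dict[int, tuple[float, float]], hour: int, value: float,
                 z_threshold: float = 3.0, min_std: float = 1.0) -> bool:
    """Flag values more than z_threshold standard deviations from that hour's norm."""
    mean, std = baseline[hour]
    # min_std keeps a perfectly flat history from turning tiny wiggles into alerts.
    return abs(value - mean) / max(std, min_std) > z_threshold


def hours_until_limit(readings: list[tuple[float, float]], limit: float) -> Optional[float]:
    """Fit a least-squares line to (hour, usage) readings; return hours until `limit`.

    Returns None when usage is flat or falling, i.e. no exhaustion is forecast.
    """
    n = len(readings)
    mean_x = sum(x for x, _ in readings) / n
    mean_y = sum(y for _, y in readings) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in readings)
    if sxx == 0:
        return None
    slope = sum((x - mean_x) * (y - mean_y) for x, y in readings) / sxx
    if slope <= 0:
        return None
    latest_x = max(x for x, _ in readings)
    fitted_now = mean_y + slope * (latest_x - mean_x)
    return max(0.0, (limit - fitted_now) / slope)


cpu = [(12, v) for v in (78, 80, 82, 79, 81)] + [(3, v) for v in (20, 22, 18, 21, 19)]
baseline = hourly_baseline(cpu)
print(is_anomalous(baseline, 12, 80))  # False: busy, but normal for noon
print(is_anomalous(baseline, 3, 85))   # True: far outside the 3 AM norm

memory_pct = [(0, 46.0), (12, 55.0), (24, 64.0)]  # memory used (%), sampled every 12 hours
print(hours_until_limit(memory_pct, limit=100.0))  # 48.0, i.e. two days of warning
```

A static 90% CPU threshold would stay silent on the 85% reading at 3 AM; the per-hour baseline catches it because it knows what that hour usually looks like.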

For stakeholders, AI-powered monitoring provides peace of mind: dashboards that accurately reflect system health in real time, plus early warning of future risks. Developers and ops get a safety net; they can trust that if anything goes awry, they’ll know immediately (or even beforehand). Monitoring with AI is like having an ever-vigilant guardian for your software, one that not only watches and warns but learns and advises.

Challenges and Risks of AI Integration

AI offers exciting benefits in every SDLC phase, but it’s not a magic wand. There are important challenges and risks to consider when integrating AI into software development processes. Being aware of these helps in strategizing AI adoption smartly (so you harness the benefits without falling victim to the pitfalls). Here are some key challenges and risks:

- Quality of AI-Generated Code: AI suggestions are not guaranteed to be 100% correct or optimal. In fact, some studies have shown that developers using AI coding assistants introduced more bugs than those coding manually in certain scenarios. For example, if a developer trusts every autocomplete suggestion blindly, they might end up with logic errors or security flaws that wouldn’t have happened otherwise. The lesson is that AI-written code still needs human review and testing. Developers must treat AI as a helper, not an infallible oracle.

- Overreliance and Skill Erosion: If teams become too dependent on AI to do the heavy lifting, there’s a risk that human skills atrophy. It’s similar to how relying on GPS for every drive might weaken your own navigation skills. Over time, developers might get less practice in debugging or problem-solving if they always defer to AI solutions. This can be mitigated by using AI to augment human decision-making, not replace it – ensuring team members still stay sharp and in control of critical thinking.

- False Sense of Security: AI in testing and operations can catch a lot, but it might lead to a false sense of security. Teams might skip writing certain tests thinking the AI will handle it, or ops engineers might not build manual monitoring checks assuming the AI has it covered. If the AI model has blind spots, those issues could slip through. In other words, AI can miss things too – maybe an unusual edge case or a novel type of attack that it wasn’t trained on. It’s crucial to use AI as an enhancement to good practices, not a replacement for them.

- Bias and Data Quality Issues: AI systems are only as good as the data and training they’re built on. If an AI tool was trained on code that contains biases or bad practices, it can perpetuate those. For instance, an AI might suggest solutions that are fine for typical use cases but fail for uncommon inputs, because it never “saw” those in training. There’s also the concern of bias in predictive systems: if past incidents were handled in a certain way, the AI might always assume that pattern, even if it’s not the best approach in a new context. Ensuring diversity and quality in the training data and having humans oversee AI decisions can help alleviate this.

- Security and Intellectual Property: Using AI that was trained on public code (like some coding assistants) raises questions of security and IP. Could the AI inadvertently suggest code under a license your company can’t use? Or could it expose sensitive logic? There’s also a risk that AI may introduce subtle security vulnerabilities if it suggests a snippet with a known vulnerability (perhaps because that snippet was common in its training data). Companies need to set policies for AI usage, such as reviewing any AI-generated code for security and using self-hosted or privacy-aware AI tools when working with proprietary code.

- Integration and Tooling Challenges: Introducing AI into existing workflows isn’t always plug-and-play. There can be a steep learning curve for the team to trust and effectively use the new AI tools. Some legacy systems might not easily integrate with modern AI APIs or platforms. Additionally, AI tools may produce outputs that need new kinds of handling (for example, a test generator that creates hundreds of tests might overwhelm your test runner if not managed). Organizations should be prepared to invest time in training the team and possibly adjusting processes to smoothly blend AI into the mix.

- Cost and Maintenance: While many AI tools promise efficiency, they can also add costs – whether it’s licensing an AI service or the compute cost of running AI models continuously. Moreover, the AI models or rules themselves may require maintenance and tuning. For instance, an anomaly detection system might need tweaking as your application changes over time. It’s important to weigh the ROI: ensure that the gains from AI automation outweigh the overhead and that there’s a plan to maintain the AI (just like you maintain software).
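As an illustration of the security risk above (not a claim about any particular assistant’s output), consider SQL built by string formatting, a pattern common enough in public code that a model could plausibly reproduce it. The Python sketch below uses the standard-library `sqlite3` module with a hypothetical `users` table to show why reviewers should insist on parameterized queries.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # Vulnerable: user input is spliced directly into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list[tuple]:
    # Parameterized: the driver passes username as data, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: the injected condition matches every row
print(find_user_safe(conn, payload))         # []: no user has that literal name
```

Both functions look plausible at a glance, which is why a human review pass (or a security scanner) still matters for AI-suggested code.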

By acknowledging these challenges, we can set realistic expectations and governance around AI. It ensures that the team remains vigilant and uses AI appropriately. Remember, AI integration is a journey – it might not deliver perfect results on day one, but with careful oversight, it learns and improves. The goal is to let AI handle the repetitive or highly complex analysis tasks while humans steer the ship with insight and creativity. Striking that balance is key to reaping AI’s benefits without stumbling over its limitations.

Conclusion

AI is reshaping the software development lifecycle from end to end. As we saw, every phase – Plan, Code, Build, Test, Release, Deploy, Operate, Monitor – has concrete opportunities where artificial intelligence can save time, reduce errors, and provide richer insights. This means software projects can be delivered faster and run more reliably, giving your organization a competitive edge. For developers and engineers, AI is like a capable teammate that handles the boring and the brain-bending tasks alike, freeing you to focus on creativity and complex problem-solving.

Embracing AI across the SDLC is becoming less of an option and more of a necessity in the industry. Companies that strategically adopt these AI tools and practices stand to accelerate their development velocity while improving quality and uptime. Of course, it’s important to adopt AI thoughtfully – keeping humans in the loop to guide the intelligence and manage the risks. When done right, an AI-augmented SDLC leads to a more agile, efficient, and innovative software organization.

AI won’t replace developers or IT teams, but developers and teams who leverage AI will likely outperform those who don’t. The software development lifecycle is evolving, and AI is now an integral part of that evolution, transforming how we plan, build, and maintain software.
